Under The Hood - Part III - Lilly's Saga - The stones of Evergreen

Table of Contents

Part III: Details and low level

This part contains details that would just clutter the big picture if included in previous chapters.

Technical vision or pillars for the development

The idea at the outset was that I was going to make an engine. Flexible at first, but it was going to be quite specialized towards the particular game as the development progressed. Reuse of code (as in an engine that could support multiple games) was not a target. It was deemed as too costly for both memory requirements and optimization wise. If reuse in succeeding projects would be wanted, it had to be done by hand.

Gamecode and gameplay features should be implemented in C for as long as possible, and be moved over to assembly as late in the process as possible (when the feature had been tested well, and deemed fun and required in the game). This would enable quick turnarounds.

Parts of the code were expected to be optimized heavily. Readability, cleanness and reusability, was given a lower priority.

Memory, pages and module dependencies

The first prototypes of the game were made in a DOS-environment. That gave you RAM enabled in all four pages from the outset. This works for a while, but becomes really clunky when you start running out of space. At the time, I was just getting into SDCC as well, so everything that had to do with memory involved a lot of digging. SDCC would normally compile all code starting at 0x0100. I needed to place code in different pages or at different locations in memory. By understanding the CRT-files (“C-RunTime”) I figured out how to do that and I could start to compile different modules that I placed at various locations in page 0, 1 and 3. Modules like GAME (the main game), VRAMPUMP (initially fill VRAM with graphics), ASSERT (assert-code resident in high mem area), and so on. The problem then, was the variables in RAM. The modules had their own data and symbols. Some of the variables needed to be shared.

Two things sorted my issues. First, I found out how to make all modules share the same memory and symbols. Second, I researched cartridge/ROM format and decided to go for that.

A note on SDCC __banked

Late into the development of Lilly it was brought to my attention that there is some kind of support for megarom-development in SDCC: The __banked keyword. An example can be found on github. This seems to be the right and elegant way to do megaroms and calls across the 64 kB address space. In the Lilly game it wasn’t used, but for future projects this is something I will look into

Shared memory

To enable having many modules (each compiled separately) sharing the same memory, I had to change my CRT-files, my makefiles and my C-files. SDCC is built around a strict separation between RAM and ROM. I knew that I would always have RAM in page 3, so every module is compiled with --data-loc 0xC000. This means that the modules’ RAM areas will clash. But this does not matter if each module’s data structure is identical. I solved this by putting all variables into a common file, non-const-variables.c, and then, as part of every module’s make-file, I compiled this c-file into a .rel file, which I link into the final file. In turn, this means that every module is disallowed to have its own non-const variables, but will use the extern keyword for every variable used from non-const-variables.c instead.

So, in a way, the symbols aren’t really shared, it’s just that every module has generated the same symbols and the memory location is shared. This also results in a massive amount of global variables which are accessible to any of the modules. –Totally the opposite of any object-oriented paradigm. So be it.

In addition, I have removed the INITIALIZER blocks/code normally found in CRTs / supported by the linker. This data/code is often a bit bloated, and would otherwise just mess up. I do not initialize any of the variables via a declaration, I have moved that to an init-function in one of the modules instead.

As for the equivalent of using the heap in C, I made a sizable block (unsigned char[]) inside the non-const-variables-file for that, and made my own malloc-like functions to get pointers to new objects. Originally I put quite some effort into making creating and deleting objects fast and optimized, but it still turned out to be too slow to do this kind of operations during one frame that had extreme time constraints. So, even if I have a heap, all objects are created at level start. This would not have scaled if the levels were many times bigger of course, but works for this game.

Space used for variables goes from 0xC000 to 0xDB9C. In total 0x1B9C bytes (7068). 0x8B0 bytes (2224) of this is heap memory.

Going cartridge-format

I was intrigued by the cartridge format. Promising zero loading time sounded fantastic to a cycle-fanatic. The possibility of a so-called megarom (i.e. 128kB or higher) was also very exciting.

First thing was to figure out how a cartridge starts. I found all the information I needed on the fantastic resource MSX Resource Center, MRC (msx.org). The most complicated part arose when my need was to, also, use page 0 for my own purpose. I wanted to have my own code in the whole 64 kB address space. The BIOS-calls that provided swapping slots and segments in the various pages resided in page 0. This makes it complicated to use BIOS. The solution I therefore went for, was to make a game without using BIOS. In turn, this makes you have to make the memory management routines yourself. When new to this part of the MSX technology, you will find the technology complicated and based upon a suboptimal solution. But I figured it out, and I believe the ram search and current slot handling mechanism is working on any MSX implementation made to date.

You need a mapper type

16 kB segments seemed to me as the best fit for my code and data, so I chose an ASCII16-mapper. According to this mapper page on MRC it is stated that these mappers have mirrors in page 0 (“8000h~BFFFh (mirror: 0000h~3FFFh)”) - which is something I really wanted. But the page also (unfortunately) states “Pages mirrors are not present on many cartridges.” In turn, at the time of development, there was no emulation of this mirroring in openMSX either, so I had to do without. Lilly’s Saga has RAM in page 2 99% of the time, and therefore using this page for controlling/mirror ROM in page 0 would have been great. Instead I had to make a solution that puts RAM in slot 0 and copy 16 kB code from ROM and into this RAM. It works, but it eats cycles and is therefore undesirable. Using RAM does have one advantage though – you have the possibility to make use of optimization tricks like self-modifying code.

|

| The final memory layout. |

As we can see, the game does not use memory above 0xF380. As BIOS-use was scrapped anyway, this area could be a solution for more memory should there be a need.

Dependencies – calling other modules

The theory is that, if you want to call a function in a segment that is not currently mapped into the main memory / address space, you need to have an idea of which page it will reside in to be able to call it. You then need to switch this segment into this page. When doing this switch, you also need to make sure that the PC (Program Counter) is currently in a different page.

In our case, page 3 is RAM and is static so having a “trampoline” function here, which can swap modules in page 0, 1 and 2 is the idea. For example: If your gamecode (GAME in the drawing above) in page 1 needs a sound effect (playSfx(id)), a call to the SFX module (also in page 1) must be performed. The GAME should call a “trampoline” function in ORCHESTRATE which in turn switches in SFX into page 1, calls playSfx(id) in SFX, puts GAME back in page 1 and returns to GAME.

To enable such a call, ORCHESTRATE needs to know the address and the signature of the function to call in SFX, and GAME needs to know the address and signature to call in ORCHESTRATE. I’m certainly no expert in C, but fiddling around with symbol files, typedefs and header files, I found something that worked.

First I need to post-process the symbol file that is made available after a module has been built. In ORCHESTRATE I have chosen to expose any function that starts with “API_”. So, using python I generate header-files like this:

|

| Defines as input files to the other header-files. |

Then I make the include-file to be used in GAME. It will use the above file as source, and looks like this:

|

| Listing up the call address for various functions as well as setting the signatures. |

I do this with any module which has a set of exposed functions. All this ends up in a clean manner - just include a header file if you want to call a function in another module.

The drawback

The drawback with this is that, once you do a two-way reference, you will have to build all or some dependencies twice. The reason for this is that the address of a function is only known after something is built.

Example: If module A contains a call to module B and module B contains a call to module A, and they both have changed, you will first need to build A, then build B and then build A again.

I believe the __banked keyword can fix this, and do builds in one go, but I assume that would also mean huge compilation time for every build.

Modules

The build-script outputs the final sizes when done, and it looks like this:

|

| Build-script would report failure if any of the modules would exceed their allotted space. |

The game module would span page 0 and page 1. startup, sfx, other, buttons, wrldload and game_ext had a one page size limit. orchestr, myassert, stack space and “data” would have to share the space in page 3 below 0xF380.

| Module | Short description |

|---|---|

| startup | Starting up. RAM-search and slot management. FM-search. Pump VRAM full of graphics. |

| game | The main game loop and all ingame functionality. |

| sfx | 3-channel PSG sound effects player and all its effects are stored here. |

| other | Handles most screens in the game and slideshow functionality. |

| buttons | Handles visuals for all "3D"-buttons (responsive buttons), the related menu-markers and the Slot Selection-screen. |

| wrldload | Loads a level. |

| game_ext | Handles boss behavior and offloads game on water animation. |

| orchestrate | Common functions that should be accessible from multiple modules. Inter-segment-calling-functionality. Music replayer. Offloads game with various functions on cloud-parallax, cartridge storage (PREMIUM only), etc. |

| myassert | Normal C-assert functionality. When the asserts fails, the program jumps to screen 0 and prints out the name of the file, the failing function name, and the line number of the failing assert. |

The end-result, 9 modules, was not part of a grand plan. Some of this is just the result of the code growing bigger and out of 16 kB page size and a new module was needed.

The build pipeline

|

| Blue means action in an external tool, red means my own custom script, green is compression, gray is output/reports and white is the resulting file. |

3rd party tools in the pipeline

SDCC

SDCC is the backbone technology used in this project and provides most of what you need to make a basic game. Its ability to easily mix Z80 assembly (including most undocumented Z80 instructions) is great, and you can also learn a lot from seeing the assembly code it generates. This compiler suite includes several different components and one of them is the assembler, sdasz80, which is based on ASXXXX. This assembler has given me a headache more than once, as it often abruptly bails out without telling you what is wrong. It can be really hard to figure out the error. Here are three examples of errors that are easy to accidentally fall into:

|

| Watch out – or burn. |

Never accidentally place (or typically: paste in) a colon after the jump-to-symbol (purple arrow). Never forget a colon after an identifier (orange arrow).

|

| Watch out again – or burn again. |

Never accidentally put the question mark behind the parameter name (red arrow). Note: These are examples and not an exhaustive list.

SDCC went through three major versions during the course of Lilly’s Saga development, and matured a lot during this time. Its latest version 4.2, produced quite compact and efficient code, and I fully recommend it for MSX games development.

Pletter

I’m using Pletter for compression and find it really good. Here are some numbers (bytes):

| File | Dimension | Org size | Plettered | % reduction |

|---|---|---|---|---|

| credits.sc5 * | 256 x 192 pixels | 24 615 | 1 133 | 95% |

| story3.sc5 * | 256 x 144 pixels | 18 471 | 6 816 | 63% |

| outside_castle2(3-4).LTM | 576 x 22 tiles | 12 676 | 1 764 | 86% |

| mountain_road2.AIM | 144 x 22 blocks | 3168 | 162 | 95% |

* Image files which span more than one page (16 kB) are actually split into two files when compressed, so there may be some extra bytes overhead due to this.

MDL - Assembler optimizer

In the pursuit of crushing unneeded cycle spending, I’ve been testing out the MDL-tool from Santiago Ontañón while it was developed. Replacing the instructions on low level proves beneficial but also comes with a risk. Because of this, I did not make the game depending on this tool. However, it is made part of the pipeline and being run on the game-module (only), as this module is where the most of the time critical code resides. I’m grateful for any cycle I can save. To make it work, one just injects it at the right place in the pipeline, using rel-files only as in the following:

- Using sdcc.exe: Make .rel file(s) from your c-file(s) and make sure to use the -c flag (compile only → output is .asm)

- Run MDL on the .s or .asm-file(s) of choice

- Using sdasz80.exe: Make .rel file(s) from all .s/.asm files (NOTE: switch -g must be used on files from point 2)

- Use sdcc.exe to build and link the final binary from these rel-files

The final result for Lilly’s Saga used on a file compiled in SDCC with optimizations turned on, is like this:

|

| Reduction summary. |

Not massive savings for a source file of 9500 lines. The savings were much greater in the beginning, but some of the optimizations/patterns available had to be marked as “sdcc-unsafe” as they involved too high risks. And when SDCC 4.2 came along even less optimizations were caught by MDL due to SDCC producing better code.

At the end of the day, you can always learn a lot about low-level optimizations from the tool, so it is smart to run on your code to reveal the state of it and do actions accordingly.

hex2bin

SDCC’s compiler outputs IHX-files which needs to be converted to actual binary for our system. It seems like most people in the scene have been using this for a good while, and so did I. I have lately learned that SDCC comes with its internal makebin which I ideally would use instead (less different installs in the setup), but last time I checked there was lacking support for "offset", and I see that it is still not working at the time of this writing.

python

python is not really a tool to grab, but a full language with an interpreter and runtime. However, what made things really great was three things worth mentioning:

- pypng package (“import png”) - it makes it so simple to work on image files

- json package (“import json”) - parsing level files is a breeze

- pypy - if you need speed, replace the normal python runtime with pypy. One of my more advanced scripts is 14.3 times faster using pypy. That is 1.4 seconds vs. 20 seconds. Fantastic!

Makefiles

The makefiles on windows are normally quite quirky, and not something I master too well. Luckily there was something called MSX Templates for VisualStudio which I could draw inspiration from and get started in no-time. These have later come with a license and new updates of it can be found at https://github.com/DamnedAngel/MSX-Templates-for-VisualStudio.

Low level drilldown

In this chapter I’m gathering a few random things that can be of interest from a technical point of view.

Music inner workings

In general this player is described in MSX Music (FM) replayer for the tunes in Part II. A few technical details are covered here.

Simple frequency adjustment 50Hz / 60 Hz (PAL/NTSC)

To be able to play the music at “the same” speed in both 50Hz and 60Hz while using the same source data, I used an old well known trick. The trick is to have 50Hz as your master and while this version plays every frame, you have to skip playing anything every 6th frame when in 60Hz. Not at all ideal, but seems to work ok’ish audibly. Visually, what we do is like this:

|

| 50 Hz runs smooth. The jerky 60Hz kicks off a sprint, takes a break and then repeats. The frequencies align at every 100 ms. |

By using this trick and as an approximation we say that NTSC is 20% faster or has 20% more frames. But looking at the real frequencies, the correct percentage is 19.4661%. This means that a long tune will, in real life, take slightly longer time on NTSC than on a PAL, when using this trick. For a 3 minute tune, it will spend 1.34 seconds longer when in NTSC mode.

Loops and jump-ins

In general every tune has these four key property-pairs, which are stored in the source code:

|

| Seg means segment number (for the ROM mapper). Loc is a memory address. |

- Start location

- Loop location (or NULL)

- Jump-in location (or NULL)

- Stub-location (or NULL)

The data stream consists of a stream of value pairs, <regnum> <value>. The regnum is as follows:

|

| Registers and control codes (“fake registers”). |

The replayer’s play routine will loop through the value pairs in a call. When it reaches an End or Wait-command, it returns.

A simplified visualization of the data can be something like this:

|

| Fully fledged tune. Not all tunes have loops and stubs. |

To jump in directly somewhere in a stream, like at the Jump-in-location, we need to know which instruments are supposed to be in active use at that point, and any other data set in the channels/instruments. The replayer supports only one jump-in location (in addition to the loop location), and to start playing there, we need to “play” the stub as the first step, as the stub sets up all the registers correctly for this location.

Playing the stub is just a series with reg-writes with no waits. Once that is done, the replayer data pointer is set to point to the jump-in location, and all is hunkydory.

The loop address is less sophisticated. If this address is not NULL when we reach end (0x3A), the replayer data pointer is set to the loop location bluntly. The instruments at the end of the tune just have to match the instruments at the loop location for this to sound correct (as is the case if you use looping in MoonBlaster).

Fade support

I found that the volume does not change much in the tunes for this game, so rather than keeping track of the current volume in each channel used at runtime, and wasting lots of cycles on that, I’d parse the data up front in the vgm2fmr-tool and make special blocks which informs the replayer about any changes to the volume. Ok, a tad bigger file. This way the replayer has a RAM-copy of the current volume for each channel at any given time.

See illustration in previous chapter. At every red line, some volume info is efficiently stored in memory. Following the file format, checking for 0x39 is only done once per play call and is done at the start of a frame to avoid wasting cycles in the inner loop of the replayer.

In case the gamecode triggers a fade down, the current volume for each channel is known, and a “volume-1” is set per channel in a small number of frames until volume has reached zero.

The fade down must be quick, because a shortcut is made in the code: During a fade-down, the replayer excludes changes to the volume if that is streaming in normally from the data in registers 0x00-0x38. Should sound reasonable enough as volume is handled separately using its own 0x39-block. But the complication here is that volume and instrument are set by the same value in the registers (sharing the same byte, just different bits). The result from the exclusion is that an instrument will not change during fade down. If a change in instrument actually is “by chance” ignored in a real situation, an artifact could possibly be heard, but I have not heard any complaints. I just made the fade down a bit fast to reduce the risk of this happening.

The inner loop and data storage

The data-format is just a series of value pairs. A quick spot at the control codes will suggest that we put all waits in one byte instead of two. As waits are a number of frames to wait, and this is often a low number, waits could be identified by “if regnum>0x3B”, where n frames to wait would be given by “regnum - 0x3B”. This would save many bytes per tune.

However, having data pairs, means that the data will perfectly fit the 16 kB segments, which in general makes the tool code and replayer code simpler as you always know that an even address is a regnum. Your replayer code for moving across segments becomes easier as well, and your tool does not need to do any special edge-handling.

In the inner loop below, the wait-requirement of 84 cycles is respected perfectly. Given that counting from the start of an out to the start of the next out, is the correct way to count. It will detect page boundary-overflow within these cycles. If you need to swap segments, which is very seldom, it will cost you 83 cycles extra during that iteration.

|

| Data stream is pointed to by HL. Data is located at 0x4000-0x7FFF. For simplicity, “new_ctrl_code” and “fixMem” (a macro) are not included. |

Handling trouble outside my control

During the time when I trimmed the inner loop down to 84 cycles, I found that the openMSX17 emulator didn’t produce correct audio. It worked with 85 cycles, not 84. I felt that the situation was a bit like “FM emulation has been around for years and all bugs should be found by others already”. Turns out, there was a bug in openMSX17. Fixed (by a workaround as far as I understand) in openMSX18. Make sure you use that version or higher.

I also found artifacts in the hardware of the YM2413 chip, related to the use of rhythm instruments. Once again, it should have been a known issue after all these years. As Grauw points out:

“But either way it’s definitely wrong, and surprising that nobody has noticed this after so many years of use.”

The issue can happen in a tune where rhythm instruments are (to be) used. The problem is that, for channels that are going to use a rhythm instrument (later) in the tune, you get a cymbal-like sound played at the very beginning even if there is no cymbal or drum playing. MoonBlaster (which is the base of our vgm-streams) sets up instruments and volume in the first frame just to prepare a channel for later playing (playing: KEY_ON and KEY_OFF controls the actual audible sound output). Grauw found out the reason for the audible artifact and it is related to the inner workings of the OPLL where resetting all registers to 0 does nothing to clear the internal state of the envelope generator, which in turn "suddenly" produces sustained sound while in KEY_OFF. More details on MRC.

Ok, so in practice, the artifact could happen in four of the tunes in the game, so I had to think of a workaround. I chose to analyze the data stream when producing the fmr-file. For suspect channels the tool manipulates the data stream to make sure that the volume is set to minimum all until the channel is actually used for the first time.

Hard won knowledge

During the development there was a lot of learning. That the bits in a byte in register are shared for several different purposes is fine for a real player which calculates things at runtime, but can be problematic when we bluntly just send back previously recorded values. Especially in loop–situations. See this example of the SUS-instruction (“sustain” - gives a lingering sound on the channel):

In this case your composer is used to, when the tune ends, it will continue at the loop-point and the current playing tone (“Y”) will get a nice sustain effect. However, as the replayer is just repositioning the memory pointer to what was recorded last time, it will feed the registers with the data from the first (only) recording, where octave and frequency is also sent to the chip (as part of the same byte value). In practice, you will get tone = X, or something related to X, instead of Y, which really was your intention.

We also find that the first frame is excessively costly, as all registers are set up in this frame. If the first frame can be run outside the normal game-loop, this is not a problem. And if you have looping music, you should avoid just restarting the tune, try to find a loop-point after frame 0, if cpu-spikes are problematic for your game.

The object structure

When you come from modern programming you will be used to object oriented languages, inheritance, polymorphism, etc. In the game I have all kinds of objects and they have various properties. Instead of having a one-size-fits-all structure for the various object types, I have a basic structure which is included as the first part of all other “subclasses” or sub-structures, mimicking inheritance.

|

| All ingame-objects share these first members. |

|

| Examples of how the various structures use the same first members. |

This way the game uses StdObjectHeader* (a pointer to an StdObjectHeader-object) throughout. Like, the LUT or the ticklist both hold such pointers. We can then cast to the specific struct if needed. Normally I would base such a cast on the nObjectTypeId member, which has values that come from a set of defines.

Sprites and enemies

The sprite structure

The sprite structure is one-size-fits-all and is less elegant than those objects above. Nothing special here, maybe except one thing, the pTickFnc.

| Bigger size than we’d like, that’s for sure. |

| The uSpriteTypeId would be any of the following. |

The uLifeSpan member is in practice not in use in the final version. I used to have sprites that vary in what happened when killed (or when a bullet reached outside of the screen). Like, removal from the current list, or not. Or even delete from the heap. In the end any sprite is just marked as killed and placed far off screen and can be reused easily if needed, as nothing is deleted from memory.

pTickFnc - pointer to the tick-function

One of C’s nice features is that you have pointers to functions. So, during game loop execution when iterating through the list of active sprites, I would call their corresponding tick function which is stored and prepared at level start-up, in pTickFnc (see also No dynamic object creation during gameplay). This gives a constant access time to the function. The common alternative is to have a long sequence of ifs on a variable to determine which behavior-function that should be used. In this game, that would include 20 or more checks.

Optimal list ordering in sprite tick and sleep list at level start

What is the optimal ordering in these lists? Actually, having two global lists for the whole level is probably not the best solution if there are many objects added to the list. Luckily, we end up with some decent numbers. As we can see in the level overview in make_level.py the maximum amount of enemies is 32. Then add some other sprites like debris, floating numbers, halo, crate and tree-trunk, and it totals 40. In a worst case, traversing the sleeplist until the end would take 40/4 = 10 frames. As sprites are awakened outside the viewport in horizontal direction, sprites will be visible “in time” if the player is running full speed (2 pixels per frame). In vertical direction on the other hand, we risk that they are awakened first when they are already inside the viewport. And so on…

When the level loads, the sprites are added to the sprite ticklist one and one. The order is dependent on how they are created (i.e given a new id) in Tiled. Normally I have created the levels from start to end, so the ids are sequenced according to their placement and normal level progression. For 10 sprites, the ticklist would be something like this:

SpriteTicklist: 0, 1, 2, 3, 4, 5, 6, 7, 8, 9

At level start I traverse the ticklist and move non-visible elements/sprites into the sleeplist in the order found below. We assume here that all sprites are outside visible area and should be sleeping:

Sleeplist: 9, 8, 7, 6, 5, 4, 3, 2, 1, 0

I did this to avoid getting too many “holes” in the list, as the gameplay progressed. 0 would be awakened first, then 1, and so on. Final words: this works for a small amount of elements, and by chance how my ids was created, so there must be smarter ways to handle this properly.

Area system

I was unsure whether I would include this here or not, as it is a bit clunky to explain reasonably well, and I barely remember how I made it. But it should be mentioned as it ended up being very efficient doing boundary checking. It is using 8 bit values only and has the ability to check for both min and max in one byte-comparison only(!) This is made possible using the idea of a signed char on the byte.

Please recap on the outcome of the 64x32 grid system in Sleeping sprites via sprite-ticklist and the Area system before trying to grasp the garble ahead.

I found the following old drawing in one of my working sheets, which attempts to explain how the grid works.

|

| The inner green box is the current calculated “inside”-area given the current viewport. |

The values for the buffers (length of purple and blue length indicators) in the game are as follows:

#define SLEEP_AREA_FORWARD_BUFFER_X 6

#define SLEEP_AREA_BACKWARD_BUFFER_Y 3

#define SLEEP_AREA_FORWARD_BUFFER_Y 6

“ORG” is set to be in pos (2,2) in this coordinate system. So, any runtime calculation would use the area coordinate from the viewport, upper left, and just add (2,2). From this we can deduce that this boundary box is always greater than the current viewport on the x-axis, but in y-direction the system just covers the same as what is visible. The light green cells indicate this range in the drawing above.

The following two sprite struct members are relevant:

signed char area_y;

Any sprite, when put to sleep, has its location in area-coordinates stored, like this:

p->area_y = ( signed char )( p->y >> 5 ); // div by 32.

16 bit shift 5 and shift 6 can be done very efficiently. Note that it is often problematic to use bitshift on signed values, but in this particular case/game it works. Enemy sprites in alive state will never have negative coordinates.

Hence, traversing all and checking whether any of the sleeping sprites are returning true in both directions in the above formula (see image) is quite efficient.

Points system

The points system is simply Binary Coded Decimals, BCD.

Actually, I started out with a custom system, but this was not as flexible as BCD. Now that I got the hang of it, I assume I’ll use this in all my upcoming games. Get the basics from Keith’s video on youtube.

Inside parallax

The fundamental idea for the parallax is that we change tile patterns only. The backbuffer in the level is not manipulated at runtime.

We scroll the parallax slower than the current tile scroll which is 2 pixels per frame. So, 1 pixel per frame, per 2nd frame or so.

In the initial version, I used 8 upper tiles and 8 lower tiles. The version updated 16*8=128 pattern bytes in the VDP at every frame (static colors). All these bytes were precomputed and supported any offset of the cloud within these 8 tiles (=8x8 pixels = 64 different positions). The space storage for this would be 64*128 = 8192 bytes. Half page. This was easily sacrificed with the help from megarom storage. With an OUTI cost of 18, just pumping these up to the VDP was 2304 cycles (or 2560 on those slow VDPs). This was an ok cost for a parallax effect, which I really wanted in the game. It was also very flexible as it supported any offset of the cloud at any given time, and any previous looks of any of the tiles would be overwritten and look correct.

However, a span of only 8 tiles in the horizontal direction meant that the scrolling of clouds would be quite close to the speed of the rest of the level moving speed (normally 2 pixels/frame), rendering the parallax effect barely visible. User testing confirmed my suspicions. I had to expand it. A brute doubling of the amount to 16 tiles in horizontal direction gave a cost of around 5500 cycles. Too much. In addition, pre-calculation of the bytes would cost me 16384 bytes – a full page or a full ASCII16-segment. This started to look fishy, so I needed to rethink the algorithm.

The new (and final) algorithm works within a bounding box of 16 tiles horizontally (=32 using two lines), but as the cloud pattern itself is only spanning 5 tiles (i.e. 10 using both lines) I use this fact to exclude updates on all the other tiles that are not occupied by the actual cloud patterns.

There are eight variants of the cloud that are stored in rom, and they look like this:

|

| Showing 2.5 cloud variants. Note that the rightmost tiles have all blue pixels. On the last variant (“7”), the leftmost (upper and lower) are all blue too. |

This means that instead of updating VDP for 32 tiles, I update for 10 only → making cost 10*8*18 cycles = 1440 cycles. That is OUTIs only –bear in mind that there is other code to be executed too. The storage cost in ROM for the patterns would be 8*10*8 = 640 bytes. Compare this to the above numbers.

Which tiles to get the cloud-patterns copied in is dependent on the viewport location. The upper left corner of the cloud will be placed at an offset in the range [0,127], i.e the range of 16*8 pixels.

|

| “Tile window”: Only 10 of 32 tiles have their patterns updated in a frame. Which tiles, is depending on the viewport position. |

A drawback with this solution is that that screen cannot scroll faster than 2 pixels at the time, because there is currently no specific code to clear the previous tile patterns in the VDP. We are depending on that we are scrolling at normal pace, and having the leftmost source patterns (which are all blue) being used in the last frame before moving the “green window” above to the right. Likewise, scrolling to the left, we need the all-blue-pattern at rightmost positions (as in pattern variant marked “0” above) to be used before moving the green window left. If we fail to get these all-blue patterns in the outermost tiles before moving the window, we end up with white remnants on the screen. Adding “blank” tiles on each side (=4 more tiles to fill with data) would remedy this (~600 cycles), and would allow for greater scrolling speed.

To illustrate the workings of the current algorithm, we can replace the tiles with something we can easily recognise. Like this:

|

| Clouds have 16 upper tiles upper and 16 lower tiles. |

Next, the cloud-object or the “cloud boundary” is placed out in the level like shown below. The solution is based on the placement being at every 4th tile only. This is connected to the speed of the level which is 2 pixels per frame and the parallax is moving only every 2nd frame. So, ¼ of the speed of the forefront scroll.

|

| The vertical guides are there to help me place out the clouds correctly. |

|

| The location from Tiled in the previous image, is here shown ingame. |

The make_level script takes the boundary for each cloud from tiled, and replaces the background-tiles with the 2*16 parallax-tiles. As we can see in the image above, the starting tile is not always 0 or ending on 0xF. The script adjusts according to the location, so that it looks correct when the viewport is covering this area. I do all these calculations to to avoid getting a cloud-object ending up looking like this on the screen:

|

| Cloud patterns actually wrap around between parallax tile 0x0 and 0xF to support the algorithm. A wrap is called a “cloud_split”. |

The bigger image above is what the tiles look like if the offset is around 104, and we look at the tiles organized like this:

|

| If there is no offset, the tiles would also be shown in this “default” order. |

Codewise, this means that for all offsets from 0 to 87 we can just copy the cloud patterns in one go (per line) from ROM and onto the VDP. For greater offsets, the cloud is split into two chunks, and we have a two-step operation.

The algorithm at runtime then becomes something like this:

This idea should be pretty straight forward. But to make this as fast as possible, I wanted to pre-calculate all the parameters in the algorithm above, ensuring next-to-no calculations at runtime. So I set up a spreadsheet in Google Sheets with some simple formulas to do that.

|

| Just a glimpse of the sheet - helping visualizations as well as calculations for dest, src and num_bytes. |

Due to performance reasons I needed to align data to 16 bytes chunks, hence there are 3 bytes wasted per offset (or “record” in db-terms). With 128 different chunks, the storage for this is 128*16 = 2048 bytes. This makes the total ROM-storage for this parallax algorithm: 2688 bytes.

It would probably look even better if we could have made the clouds slowly scroll across a 32 tiles wide boundary instead of 16. That would either demand 64 tiles set aside for parallax or imply changing the backbuffer runtime at every place where a cloud is located. The latter would most likely reduce the total needed tiles to 10 but require a more advanced algorithm and be quite costly. And both would complicate the level design as clouds/cloud-boundaries would collide with other things to a greater degree.

Transitions

Similar to the autopilot mentioned in Input modes in part II I also use a state machine for various (visual) transitions in the game. As there are several things that should happen in a specific sequence and over several frames. At the start of every frame I call transitionFrame(), and it will act (or not) based on the current g_uTransitionFrameCode. Many transitions will do something with the palette or the full screen, and hence you want to do this at the beginning of the frame. Otherwise it will potentially occur when the raster is at the middle of the screen and you will get visual artifacts stemming from that (tearing and similar).

Examples are:

- when warping (make screen black when changing room/level, and turn on again)

- start of fade-up

- hiding screen when losing a life

- boss lightning effect (alternate between black and white for the color index which normally holds black).

Error checking

I try to do as much error checking up front when making the data (level creation and similar) and during loading a level, as ingame checks may be too costly. Still there are many places outside the tightest loops where I do some extra checks. Hence I built an assert-module which would print out information when it failed.

|

| Asserts are very handy during development. |

The code included from the asserts can be easily removed with setting a define (#define DEBUG 0), handy for release-builds.

Furthermore, the startup sequence checks that the user has MSX2 as minimum, 128kB VRAM as well as looks for FM-PAC.

Low level optimizations

See also structural opts in Part II. In these chapters I’m going to visit a few topics or ways of doing things which I have been using and, from what I can gather, not being too well known or common practice.

I admit these are random picks, but it was never the intention to write down every trick in the book. Besides, I am pretty blind to my own coding habits, so it becomes hard to judge what is “newsworthy” after a while.

SDCC

There are several things one should know about SDCC when you want high performing code. When in doubt about what is going on, take a look at the generated .asm-file that is output alongside your other files.

Make sure you have v4.2 or higher as many optimizations came with this version. Most notably the default calling convention was greatly improved (__sdcccall(1)). Code size was reduced as well. Pre-v4.2 I was also using the __z88dk_callee keyword quite a bit. It moves the cleanup of the stack to the callee, which often saves a lot of cycles. This was, for the most part, made default in v4.2, so this trick is only needed in some places where one is still using the old calling convention, __sdcccall(0). If you must use the old calling convention, consider stranger ways of dealing with return to save cycles, example:

|

| Quick grab of the 16-bit parameter into DE and a quick return. –Will confuse the profiler script in openMSX though. |

| Parameter into DE - the way “google told me” to do it (conventionally). |

The “boilerplate” for the upper routine is 33 cycles. The other, more conventional one, is 86 cycles (can be made 57 cycles if using hl instead of iy, but still…). With the new calling convention the parameter will already be in hl, so the cost is ret only, which is 11 cycles. All this is not counting whatever SDCC needs to do up front to arrange for the passing of values of course.

Compile with optimization

SDCC has a few command line parameters that can be passed on to make it generate more optimal code. I have some values I have used since v3.9 which comes from an old CPC wiki source: From what I read the values sent in these parameters do not work exactly as they used to. I’m not sure what the right values are these days, but I’m still using the same values and really good results are achieved.

--opt-code-speed --max-allocs-per-node 2000000

Quite often the result is less use of index registers. I only use this in the final versions, as this takes the build of the game module from ~1 minute to ~25 on my 8-core AMD Ryzen 7 4800U 1.80 GHz. Sadly, SDCC does not utilize multi core on compilation.

Globals are good

As storing and reading values in plain ram is very fast, globals can be recommended – contrary to common wisdom wrt to readability, maintainability and error proneness. Local variables on the other hand are slow and thus not recommended in places where speed is important. They are stored on the stack and are handled by SDCC using index registers as long as you have more than a few variables in the function. No or very few parameters and local variables can make SDCC stray away from the index registers, so I have still managed to achieve effective code generation using that in a few places.

Index registers

The slowest registers of them all, IX and IY, are used a lot by SDCC, albeit less in 4.2 compared to older versions. Sometimes index registers are faster than the equivalent code using HL and others, but mostly not. Normally not in the way SDCC uses it. Just be aware. I typically check the generated code in important parts of the code and, sometimes, replace the code with asm.

The IX-register is always reserved as SDCC uses this, i.e. this is the last register you want to use. If you use it, you must push and pop, which is 33 “MSX cycles” alone.

__preserves_regs keyword

Use __preserves_regs. It gives hints to the compiler so it can avoid pushing and popping registers when calling your function. I’m using it a few places.

__naked keyword

You can use this when you want inline functions and want to instruct SDCC not to create prologue and epilogue, which may be costly at times (you can’t really know up front. Can be a bit risky, but good if you know what you are doing. You even have to insert ret yourself. Implicitly, this also means “__preserves_regs(all)”. I use it a few places, like here:

|

| Running naked in a field of flowers. |

Amending asm generated from C

Another trick I use from time to time: If I’m pretty ok with some code generated by SDCC I can compile with full optimization and then further optimize the most obvious stuff by hand and just copy the code into an assembly source file. Like a hybrid. Can be used in those cases where the function has no changes coming in the time ahead.

VDP - tricks

Ports and ignorance

As mentioned in Dedicated ISR, no H.TIMI or BIOS usage in Part II, Lilly’s Saga does not support external VDP. This decision was mostly based on all the code examples out there which use the standard ports directly. There is not too much gain using hardcoded port numbers, particularly not when I’m using OUTI. Which for this game is ended in 95% of the "calls"). In retrospect I should probably have supported external VDPs. Worth mentioning, as there will be lots of examples from the source code in the chapters below where hardcoded port numbers are used.

Combining color and pattern tables for block 1, 2, 3

… in screen 4. If it wasn’t for this trick, which I accidentally stumbled over at MRC, I would (as an example) have to update three blocks with the six tiles instead of only one time. And the same with the color information. Three times instead of one.

|

| Expensive? The bump uses six tiles which changes both pattern and color. |

According to the technical handbook, we see that the screen is divided into three blocks:

|

| From Konamiman’s pages: Three blocks for the patterns in screen 4 (and 2). |

Being able to update one block only and have this affect the upper, middle and lower part of the screen is desirable. Likely for 95% of all games. It is only ⅓ of the job, obviously. Turns out that this undocumented trick can be used on many, but not all, VDPs for MSX1 in screen 2. For MSX2 however, all known VDPs support this trick and you should be good to go.

Unrolling the OUTs and OUTIs - watching the speed limits

As I have a megarom at hand, space is less of a concern and I use unrolling at every place I can. Unrolling is well described here.

|

| Example of two routines with heavy unrolling in the game. |

You just need to be aware of the speed limits. These are not really documented officially I believe, but we’ve started getting some good numbers via aoineko’s VATT tool which succeeds the numbers on the MAP website. Key for me using screen 4 is that the limit is always 15 cycles, regardless of whether screen or sprites are turned on or not. 15 is fine for OUTI, but you cannot unroll OUTs to clear off a part of the screen, that would end up too fast for the VDP.

Precalculating the VRAM address

Not always, but in many cases, you write to the same VRAM address every frame. There is really no need to calculate the bytes that are to be passed on to the registers every time. The typical routine calculating the values to be sent to the registers are normally something like below (case A).

|

| Case A: Setting a write address in the VDP costs you 124 cycles (not counting call+ret and putting the address in HL). |

If this was done with four precalculated values, it would be done like this (case B):

|

| Case B: To compare with the above we ignore the cost of setting the HL-value, cost: cycles 80. |

Advancing the VRAM pointer with “in a, (0x98)”

Not too much to gain here, but if you want to steal a cycle at every spot you can, you can utilize the IN-operation for advancing the VRAM-pointer. In the example below I have a RAM-copy of the SAT where I want to iterate over 4 bytes, but only update byte 0 and 1 in the VDP. With aligned memory and a manual increment of l, there is 2 cycles saved for each run in the following code snippet(macro) compared to the original use of just OUTIs:

|

| Using “inc l, in a, (0x98)” (17 cycles) instead of OUTI (18 cycles) to advance internal VDP VRAM pointer. |

The game uses the particular code above for the 8 border masking sprites, and does that three times (only 7 sprites in the last run) on the interrupt. The remaining 24 sprites update byte 0, 1 and 2, so only 1 cycle is saved per sprite using this trick. In total, during a frame, the total saving is 2*8*3-1+24 = 71 cycles.

We could have been tempted to not have a full copy of the SAT, and only keep copies of y, x in RAM and thus avoid the inc l, and just advance the internal VDP VRAM pointer using in in a,(0x98), but this would be too fast when unrolling (12 cycles vs 15).

No need to always update VDP register #14

Register #14 holds the upper bits, 14 to 16, i.e. it will hold the value in the range [0-7]. In practice, this is the index of the 16 kB block in use. For screen 4, this is the same as the VRAM-page. Which means that you can save 32 cycles in case A and 36 cycles in case B above, if you just ignore (over-)writing this value.

Here is an example of writing the score and coins in the game which takes (only) 358 cycles. The precomputed 17-bit VRAM addresses are stored in four bytes (“quad”) for both points and coins, but in the second case, only two of these bytes are used.

|

| Yellow parts show that 4 bytes are needed for addressing at the start (to set the correct page), while in the subsequent addressing we only need 2 bytes. |

In retrospect and totally missing the point we are demonstrating here, we see that we could have saved some further cycles by just advancing the VRAM pointer 3 times, instead of setting it directly (ref. previous chapter). To do that you would replace the instructions marked in pink with in a,(0x98) / nop / a,(0x98) / nop / a,(0x98) / nop. This would remove 9 more cycles.

Collision and why not use VDP collision detection?

Our VDP has some kind of sprite detection. From what I can read from the manual, the support seems insufficient even for a simple game. It detects a collision (pixel precise), and if there is one, it can give you the coordinates. But if you have several collisions occurring at the same time (and say, some which should not give any attention, while others should), the support isn’t there. At least from what I could see.

I needed collision detection between some of the sprites, mainly the player character and the enemies. The player character consists of two sprites as do the enemies as well. The game was simplified so, any enemy-enemy collision was ignored.

I had to go for checking boundary-box intersections. All enemies have the same size –the same size as the player. Most enemies generally fill the 16x16 space assigned to them. I made the collision rectangle 14x14 (1 px inset from each side). All positions in the game are stored as 16-bit, and 16-bit rectangle intersection checks was something I wanted to avoid. Because I always kept a copy of the SAT in RAM, I made an algorithm that worked on the 8-bit values in screen space (/SAT) instead. One axis intersection test cost only 31/32 cycles. The whole routine ended up super-fast.

From the SAT setup in Part II, we know that the enemies start on sprite #20 and that they each use two sprites. The rental system would tell me if a slot is in use or not. At maximum there would be six 8-bit rectangle tests. Very often the first check on Y would fail anyway, as the game is designed to have very few enemies on the same line (maximum two).

Z80

In general, working with opcodes and optimization gives you a good grasp of the cost of things after a while. I certainly matured quite a bit over the course of this project. Get to know those costs! 🙂

Shadow registers

The shadow registers are 3 pairs, BC’, DE’, HL’ which comes as a group, and in addition we have the AF’ which in practice is an 8-bit register. The shadow registers are there for you to use. No one else is using them, at least not when you are making a cartridge and all code is handled by yourself. I use it in several places where I otherwise would run out of registers. Typically when you have an outer loop with its own bookkeeping, and an inner part which needs its own registers.

I-register

It was quite late that I learned that I could also use yet another register. The I-register is not used for anything particular in IM 1 (only) and can therefore be used as you wish. The only issue is that it does not have much functionality and is more costly than other 8-bit registers. It’s best used for storage when you run out of registers. Here is a cost comparison in “MSX” cycles:

ld i,a : 11 cycles versus ld b,a : 5 cycles

Note the “hidden” property of the ld a,i instruction: It sets the flags (S and Z) according to the value. You do not need to compare the value with CP or OR (saving at least 5 cycles). If you want to do a three-way split (negative, 0 or positive), we can simply:

|

| Sometimes you can omit that OR A for testing the value. I used this in the game. |

Memory alignment

Memory alignment is about how data is stored in memory. Data alignment involves placing data at memory addresses that are multiples of a certain value, often dictated by the size of the data being stored.

I will typically make data sizes a factor of two in those cases where we need to be able to quickly calculate the location of an object with index n. Not a factor of two will be more costly to compute.

Along the same lines, if I have an array of, at most, the maximum value of a byte (=0x100), i.e. 0x100 bytes, it would be great to place it at an address that is a multiple of 0x100. Because that would mean that the MSB of the address is constant, and that the location can be expressed as a 16-bit offset + an 8-bit location. All calculations of the location within the array can be done with one single 8-bit register. Which is fast.

Unfortunately SDCC v4.2 does not have any alignment mechanisms that I know of, so it’s a bit clunky to do. I have defined my arrays via asm. I could have benefitted from using it more than I have, but at least it is used three places:

- SAT RAM copy, array size 0x80 (aligned to 0x80)

- Tile collision codes, array size 0x100 (aligned to 0x100)

- Object LUT, array size 0x40 (aligned to 0x100)

Example: When the collision codes are placed at 0xBE00, I find the address in HL of any given tile_id by:

|

| Finding the address is of “no cost” with aligning. |

Undocumented instructions

Around 2020, I became aware that there are some additional instructions on the Z80 that could be used. The warning was that, as these are undocumented, they may not be implemented on the target hardware. It will be my decision to use it or not. I’m not a Z80 idealist who will stick to the original forever. The undocumented instructions are in all MSX Z80s known, and most of them are even in the R800 as well. Of course I wanted to use them in Lilly’s Saga. The papers from Sean Young can be found here: https://www.myquest.nl/z80undocumented/ but I mostly used a short list page to just get a quick overview of them, or I often just browsed the interactive Z80 / R800 instruction set at MAP . However, for the last link, you cannot easily see if an instruction is official or not.

The instructions I found interesting are the ones which break up the index registers into plain 8-bit registers and let you do normal ld, add, adc, sub, sbc, xor, or, and, cp, inc, dec with them. They are called iyh (high byte) and iyl (low byte). Like ld a,iyh. In general, all of these instructions are a bit more expensive than your normal register use, just because they involve index registers (where the byte size of the opcodes are longer).

The reason for me to use these undocumented instructions is that I sometimes run out of registers. For example, you need to store away an 8-bit value and you cannot use shadow registers. Here is a cost comparison in “MSX” cycles, using IYh as an example:

|

| Note: push/pop uses two registers. |

It wasn’t strictly necessary, but I have also used the SLL instruction (see bit shifting below). It is also dubbed SL1 as “Shift Left Logical” is not a 100% correct name. It is not documented on the MAP-link. Not sure why, but probably because this instruction isn’t included in future generations, like R800 or Z380.

During the first stages of the development on this project, undocumented instructions were not supported in SDCC/sdasz80, and you would get errors. When that happens you can create macros instead, using the opcodes directly, like this:

|

| Example of how you can use “ld a, iyl” or “ld a, iyh” when it is not directly supported by your assembler. |

These days, you can enable support for this in your assembly files with this at the top:

.allow_undocumented

To allow SDCC to use undocumented instructions in the code it generates, you need to use this command line parameter:

--allow-undocumented-instructions

At the time of writing, the above requires SDCC v4.3 with a later snapshot build.

SP register “abuse”

This is one of the most powerful tricks you can use. It means setting aside normal stack-based programming, and using the SP-register for plain transfer of two bytes at the time. The fundamental idea is that, given you have a pointer to a byte in memory, then (using HL as an example register pair) POP HL are the fastest way to (both):

- Load (“ld”) two bytes into a register pair, like LD HL,(SP)

- Make your pointing register (SP) point to the next pair of data, like ADD SP,2

All this in just 11 cycles. The corresponding PUSH is 12 cycles.

I use this in several places in the code. It comes with a few drawbacks. It takes some cycles to set up correctly, you must have interrupts disabled all the time, and you cannot use calls. PUSH and POP commands are obviously used for something different than in normal scenarios.

The prologue and epilogue, using IY to store/restore the original SP-value, is like this:

|

| You need to store away the original SP-value. Using a register is the fastest way. |

The above overhead will add 45 cycles to your execution. If not running on the interrupt already, you might have to add DI+EI too which is 5+5 additional cycles. Hence, if this trick should be used, the gain must otherwise be greater than the overhead, as well as having the other drawbacks work well too. Especially the requirement for DI can be tricky in games where interrupts must happen at specific raster lines.

Almost always, avoid index registers

Index registers are awesome for making the code cleaner, but they are very expensive to use, normally. However, there are situations where index registers can be the faster alternative. I’ve typically experienced this when you have code that works on (data structure-) “records”. In the parallax code, I was unable to make a version with the normal registers that were faster than the index-register version. The moving-platform routine is another example, but in that case it was more of a case where I was running out of registers.

Below are some comparisons using “MSX cycles”:

When you get some of this in time critical routines and/or an inner loop, things get out of hand quite quickly.

And by the way, jp (hl) is really jp hl. Same with the index registers. Weird.

Bit shifting

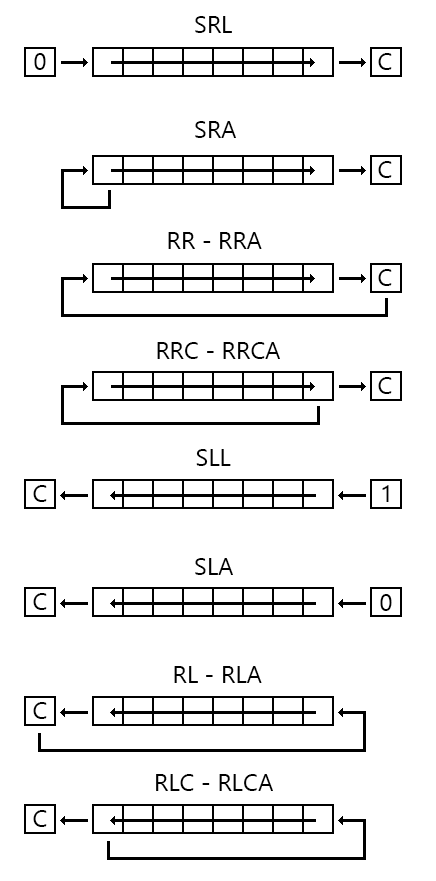

I use all kinds of 8 bit and 16 bit shifting in the code. It’s not hard to deduct the fastest possible ways of doing this by studying the cost. For example RLA is 5 cycles and RL A is 10 cycles (only some flags differ). I’ve always had this wikimedia commons image bookmarked for quick reference:

|

| Édouard BERGÉ, CC BY-SA 4.0, via Wikimedia Commons. |

Also, I’ve been using this nice collection of 16-bit shifts on the z80 over at chilliant. Recommended.

Fastest 8-bit to 16-bit unsigned addition

When memory alignment is not possible. – I’ve been using this way of adding 8-bit values to 16-bit lots of places in the code. After trying out many different variants, I stumbled across a short comment by Metalion on MRC:

Given a 16 bit addend in HL and a 8-bit addend in A:

ld l,a

jr nc,$+3

inc h

This one takes only 23 cycles – and the beauty of it is that, despite there being a conditional it will always use 23 cycles.

Precalculate as much as possible

The game is filled with lots of pre-calculated data which are put in ROM. Also known as Look Up Tables (LUT). I typically used normal C, or Google sheets or python to generate the data.

|

| KISS - Keep It Simple Stupid. Divide by 22 in ROM. |

Here are some examples of where it was used:

- Divide by 22: Each screen is 22 lines. Given a Y-pos I need to find which screen we are in to set the correct ticklist.

- Palette and intermediate fade-step palettes.

- Parallax data.

- Player and enemy sprite movement curves when killed.

- Bricks explode sprite movement path.

- Screenshake Y-offset.

- Lots of others.

|

| Pre-computed parallax data which also is aligned to 8 words = 16 bytes. |

Pre-calculations is also done when a level is loaded and the results are put in RAM. Typically I need to find a location in the level data based on the tile-y-position, and the offset for this is Y_POS * NUM_TILES_IN_X_DIR_IN_LEVEL. Similar for the AIM. It makes sense to avoid spending time on multiplications ingame at 60 Hz.

Favor HL over DE (and BC when you can)

HL has a few operations which the other normal register pairs do not have. Like 16-bit addition and subtraction is only handled by HL. Single, non-A registers may get its value from the memory address of hl, like ld c,(hl), as well as some other special instructions. Equivalents do not exist for de or bc. Furthermore, HL is sometimes faster than the equivalent of DE and BC:

ld (nnnn),hl : 16 cycles versus ld (nnnn),bc : 22 cycles

Reading and writing to static RAM addresses using register pairs instead of single registers

Given that you need to write two bytes to RAM (or read), there are likely situations where the fastest way to do this is to use the instructions that use a register pair BC/DE/HL. Especially when the pairs to write are not sequential and not plain data-transfer (then we use LDI).

These paired instructions work on static addresses only:

ld hl,(nnnn) : 16 cycles

ld bc,(nnnn) : 22 cycles

ld de,(nnnn) : 22 cycles

ld (nnnn),a : 14 cycles

ld (nnnn),hl : 16 cycles

ld (nnnn),bc : 22 cycles

ld (nnnn),de : 22 cycles

Using a register pair you get either 8 or 11 cycles as cost per byte for a read or a write. As a comparison if you would write via addressing in a register pair (ld (hl),a), it would cost much more as you would have to set and increase or change the address in various ways.

I’ve used this in many ways where I manipulate the SAT-copy in RAM.

|

| HL points to an array of Y-positions for the SAT (➔ C and E). X-values (i.e. B and D) are static for every write. Each LD-instruction writes in steps of 4 bytes. |

It’s not very elegant, but it is fast. The code in the image above costs 289 cycles, and updates x and y pos for 8 blocking mask sprites. 62 cycles is outside the image and is used to set the HL-address. Result is that placing 8 blocking sprites in RAM at the start of the frame costs only 362 cycles (including ret).

Favor 8-bit calculations over 16-bit

Given that the result can be in 8 bits, that is. It should be quite obvious that an 8-bit cpu is faster working on one byte instead of two, but it can be easy to forget sometimes, especially if you work in C and not in assembly. The Area system is a case where I strive to get into 8 bits as well as the sprite collision routine which also is focused on 8-bit instead of using the original 16-bit positions.

JP vs JR

jp nnnn is faster than jr nn - it just takes 3 bytes instead of 2. Unless the exact size of the operand is of any matter, you can blindly change from plain jr to jp, but if there is a conditional involved, like jr z, nn, you need to carefully consider if you want to do the change. With a condition involved, the jr can often be faster. I’ve been trying to be conscious about this throughout time critical parts.

Jump-tables (jr) and self-modifying code

Self-modifying code involves replacing bytes/codes at runtime in the code part of your program. Thus, this needs to be done on code running in RAM only and not ROM, and you need to know what the opcodes and operands look like. I knew I wanted to test out this trick as it looked intriguing, however, I couldn’t gain too much from it in this game, so I ended up only using it in one place only.

Expensive loops were the target, and sceneTick() was an obvious candidate. My initial code in that function was something like below.

|

| Iterating through a list, and if visible, do a switch/case. |

A switch/case is just a series with comparisons under the hood. So, when the number of cases in the switch block becomes too many, the last comparisons will take a long time. It will make the execution time (potentially) vary a lot too, which always is undesirable.

I made an optimized asm-variant of the code above, and the time to enter execution of each TypeId varied between 35 and 88 cycles with an average around 60. Not insane numbers, but by using jump-tables/self-modifying code, I got it to a constant 45 cycles (ok, the last one is actually 43 cycles).

In the code above we could also have used JP, but it did not pay off this time when all my functions could be placed within 127/128 bytes from the JR-instruction. You also see that there are many typeId values I don’t really use (jumps to go_assert), but that is because the id’s were set to varying ids already, and I did not care to rearrange all tick-objects ids to be grouped. In practice, go_assert is never executed here - it was just a precaution and I could have had a couple of NOPs there instead.

In another game, with a bigger switch/case list, the gains can be much greater.

Note: Self-modifying code is seldom recommended and you should consider if you can use variants like jp (hl) instead, sometimes that is just as fast.

Unrolling and using macros to avoid CALLs, RETs or loop constructs

Unrolling is covered in the VDP section, but it also applies to other instructions like LDI, LDIR, LDD, LDDR etc. Macros can be used as a kind of unrolling as well. Parameterized unrolling, even. If you can afford sacrificing some space for some of the most time critical inner loops or things that run (multiple times) every frame, you can use macros which will just replicate code for you.

In the code below, writeSpriteAttrTableRamToVram will be accessed at the start of every frame when the SAT RAM copy is used to update the real VDP SAT.

|

| Max unrolling. Nested macros, yes, but that’s not the point. |

The writeSpriteAttrTableRamToVramMasksOne (which costs 70 cycles) can be executed in several ways. Either inline as above with 8 repetitions (.rept 8/.endm), or as one of the examples below (assuming there is a ret at the end if it is treated as a routine). Sure, there are even more possible ways to do this, but these examples will suffice:

|

| Using loop constructs and CALL/RET. |

Case 1 will add 347 cycles. Case 2 will add 232 cycles. Case 3 will add 115 cycles. But all use a lot less space than the version I used.

Custom tools and formats

Scripts/tools

vgm2fmr.py

This tool parses uncompressed vgm-files and writes the result in a file format as described in .FMR - FM RAW so it can be used by the replayer described here MSX Music (FM) replayer for the tunes in Part II and in more detail in Music inner workings.

|

| The choices when using the tool. |

As we can see from the VGM format there is a big header at the start of the file. I will check for YM2413 first. I would also ideally check for the Rate-value which is supposed to say either 50 (PAL) or 60 (NTSC), but as openMSX does not store this value, I need to keep track of frequency by other means.

The main idea for the tool is to handle these VGM commands:

- 0x51 - YM2413 reg write

- 0x61 - Wait n samples (in practise x amount of milliseconds elapsed before next reg write)

- 0x66 - End

- 0xa0 - PSG/AY8910 reg write

We do not use the last one for audio, only to mark tag a frame (manually in MoonBlaster) as a loop frame or jump-in frame. When a conversion is done, we can see something like this:

|

| Most info here is not of too much use in practice, but is merely debug data. |

We usually check that the max writes in one frame is not outrageous and that file sizes, etc look ok. The LOOP, JUMP_IN and JUMP_STUB are key tune data added to the source files.

Frame accurate data (facc)

To replay the tune in a game means that you need “frame accurate” data. When parsing the data, you first need to know if it is a PAL or NTSC recording. Then you parse and add up all the milliseconds in wait-values as you go, until a full frame duration has elapsed. That marks one frame. Repeat until the end.

Amongst the tools in the toolset “vgmtools” there is actually a tool called vgm_facc which is supposed to help you with this process. When we run this tool on the files recorded from MoonBlaster and openMSX you get this output:

|

| That is some hefty amount of errors reported. |

To understand this output we need to check the help file:

| Thanks, but no thanks 😢 |

In other words, our source data is catastrophically bad and will introduce jitter if this tool is used. If I go about and do a facc myself using the obvious conversion algorithm, I will get the same results. In practise, we record music that is (highly likely) played once per frame (as if interrupt based), but from the recording, it looks more like this:

|

| Red is active reg-writes, white is no action/wait. Green is the supposed time to replay music in the game. |

We can see that there is something irregular over how the reg-writes are spread over time in the source material. So where does this timing error stem from? MoonBlaster? openMSX? My code? I visited this problem many times over many weeks trying to find out. In the end, I gave up finding the culprit. I just suspected that it was outside my code and hence I just tried to add a fuzzy factor and a simple heuristic in the conversion tool. I introduced a magic number: 300. Because I have seen that, often there are big waits added as the last thing that happens in a frame. So, the parser just looks for waits that are bigger than the magic number, and uses that as an indicator of a frame-separator. The wait may be big and even span over many frames. This algorithm seems to work very well.

Fixing up the data

The tool has several features, like:

- It removes silence at the end of the recording.

- It can manipulate the stream to hide the drum-error described in Handling trouble outside my control.

- It will insert special blocks in stream which informs about the current volume (as mentioned in Fade support).

- It scans for PSG playback and uses that to set memory address for LOOP and JUMP_IN.

- If there is a JUMP_IN address, it analyzes the stream and stores the exact value of all registers at this given point. Register writes for writing all these in a chunk are added to the end of the file at a location called STUB (as mentioned in Loops and jump-ins).

- It will also do certain sanity checking, detect wrong frequency and such.

The above points are best described in more detail by looking at the source.

Visualization

Because of the drumbug and the nightmare caused by the bad timings I made a visualization tool for the data. – Just to understand better. In the beginning I did not use PSG-markers to set loop/jump-in addresses, but calculated them instead, so at that point it was useful to see the visual representation at the location for verification. The output resembles a tracker and looks like this:

|

| K: KEY, S: Sustain, I: Instrument, V: Volume, O: Octave, E: Enable, B/BD: Base, S/SN: Snare, T/TT: Tom-tom, C/CY: Cymbal, H/HH: Hi-hat. +/-/X: ON/OFF/OFF+ON. |

After all the trouble was conquered, the visualization was of little use.

make_level.py

|

| The output from the tool being run 16 times in a row (only the first prints a header). Handy for controlling memory and cycle usage. |

In Tiled, first you need to make a map which has a given size and a grid (we use 8x8 pixels for a tile). Further, Tiled has support for different kinds of layers, and in Lilly’s Saga I use two of them, Tile and Object.

In a tile layer I can place out tiles which will stem from a tileset. An overview of tilesets is found in Tiled and (in our case) is stored as separate json-file(s) which in turn gets referenced by the map-file itself.

The map-file is the source for the make_level tool. As mentioned in Output files from level construction in Part II, the tool parses the json-file and outputs these files:

- LTM (tilemap) ⇒ from Tile layers (Background and Coins)

- AIM (Action Id Map) ⇒ from an Object layer (Objects – location, type and id)

- BCM (Block Collision Map) ⇒ from tileset-json-file that is linked from the map-file

- OAP (Object Action Property) ⇒ from an Object layer (Objects – location, type and id)

- PWS (PathWay set) ⇒ from an Object layer (Pathways)

|

| A 576x22 tile layer. Clean and beautiful. |

To create the BCM-file, we parse the “tilesets”-properties of the map-file:

|

| This map has 5 referenced tilesets. The “firstgid” gives away which tile-ids that belong to a particular tileset. |

The BCM-file is all about some key properties which are 8 properties stored as bit in one byte per tile. They can be seen in Tile codes in Part II, and while parsing the file, it will look like this:

|

| Parse the custom properties and map the names to a bit-value. |

The parsing is as simple as a named string to (bit-) value mapping:

|

| From the python-based tool: Using dictionaries for mapping. |

Below you will see how neatly the objects are stored in the various object layers by Tiled. The make_level tool uses the type, the id, the position and the size. Depending on the type, there will be different custom properties expected. The first one (ExitObject) has something called a target. The second one (CrateObject) does not have any custom properties. The third one (HiddenHitBlockObject) has four.

|

| Objects listed. The second object above differs from the others by having a reference to a tile-id. The crate is shown in the tool by a 16x16 bitmap. |

The last custom field above, of type object and called connect is interesting. If not carrying the value 0, we have created a link between objects using Tiled’s built-in referencing, which in the editor, are shown by dotted lines and arrows as below.

|

| 4 arrowheads in the above screenshot means 4 references between objects. |

In gameplay terms this means: The trigger object will make the referenced and hidden sprite visible. The WarpObject will move the player to the other WarpObject. The RezzControl is part of a ticklist (and gets its code run every other frame) and will place a sprite at the sprite-spawn location at various intervals.

When parsing the hierarchy in the json file, I use even more mapping, like this:

|

| Which properties are expected for each object type, and which types are eligible for being part of the ticklist? |

All objects are read and made ready for being written to a binary file, which the wrldload module can read at runtime. But the tool also uses some logic to make a few other new objects, like the ticklists as explained in Ticklist and reduction of active objects in Part II. The coins which come from a tile layer become AIM-content as well as are being copied into the background tilemap.

The PWS-file with content (thoroughly explained in Pathway rects in Part II) gets its source data from rectangles in a dedicated layer. The contents are both the raw rectangles given in the layer, but also new, combined rectangles calculated from the algorithm below. The algorithm below also creates objects which will tell the gamecode when to use the various rectangles as viewport boundaries.

The tool parses all rectangles and sees how they intersect. I deducted a ruleset, which allows the overlapping variants below.

|

| Most overlap variants are shown here. |

The ruleset says that a maximum of two rectangles can intersect at any given point (where sharing an edge in each direction is allowed). And a rectangle cannot be fully overlapped by another, and the rectangles cannot fully span over the other across one axis each (like a cross).

Based on these rules, I can deduct the right, current, outer and minimal boundary box for the viewport in any given area. If rectangles do not intersect they will indicate separate/isolated rooms or levels.

The algorithm then goes about finding the midpoint on the screen of each side of a crossing edge, and at such a point, a new object is placed. This GeneratedPathwayObject will point to the right rectangle (i.e. viewport boundary) for this screen(s) hereon for the player. The gamecode will be aware of new viewport boundaries throughout gameplay as GeneratedPathwayObjects are ticklist objects, and as soon as their code is run in a frame, they will set their boundaries as the current level boundaries. GeneratedPathwayObjects are part of the OAP-file, and not the PWS-file.

On its way towards writing the files for the game statistics is gathered and a lot of sanity checking is done as well. At the end, the files are written and some statistics are written to the console. See Custom file formats wrt. how the data is stored.

png2sc4.py

I could not find a simple command line tool that converted from png to screen 4 format, so I made my own. What is peculiar with screen 4 is that you can only have 2 colors per line in an 8x8 pixel block (aka a tile). These two colors are the foreground color, and the background color.